In this spotlight...

- Introduction & Reflections by James Lester

- Featured DRK-12 Projects

- Assessment of Mathematical Learning in Action in the Game-based Learning Environment – E-Rebuild

- Development and Empirical Recovery for a Learning Progression-Based Assessment of the Function Concept

- Graphing Research on Inquiry with Data in Science (GRIDS)

- Math-Mapper 6-8: A Next Generation Diagnostic Assessment and Reporting System within a Learning Trajectory-Based Mathematics Learning Map for Grades 6-8

- Next Generation Science Assessment Project

- Additional Projects

- Resources & Further Reading

New Measurement Paradigms and the Future of Technology-Enhanced Assessment

James Lester, Director of the Center for Educational Informatics and Distinguished Professor of Computer Science, North Carolina State University.

Research on assessment has experienced a sea change since CADRE published the New Measurement Paradigms report in 2012. Led by Mike Timms (ACER) and coordinated by Amy Busey (EDC) with Doug Clements (University of Denver), Janice Gobert (Rutgers), Diane Jass Ketelhut (University of Maryland), Debbie Reese (Wheeling Jesuit University), Eric Wiebe (North Carolina State University), and myself as collaborators, the report presented a snapshot of measurement methods featured in contemporary DRK-12 and REESE projects. It emphasized measurement methods from projects centering on intelligent learning environments, including methods that built on a strong psychometric foundation to investigate approaches such as embedded assessment, as well as emerging techniques that drew on machine learning. Increasing adaptivity and expanding dimensions of student responses were pervasive themes running through the report, which painted a picture of a creative moment in time for assessment.

The years since the report was issued have been exciting indeed. Perhaps most striking is the astonishing acceleration of advances in AI and the promise it holds for the next generation of assessment. While the New Measurement Paradigms working group was prescient in recognizing machine learning as an enabling technology for assessment, the sheer magnitude of the inferential power that would soon be unleashed was not apparent. All of the cognitive qualities that designers of intelligent systems target—adaptivity, robustness, self-improvement—are now on the table for next-gen assessment.

AI technologies are now opening the door to assessing new kinds of constructs in new kinds of settings with new kinds of frameworks. As an example, my colleagues and I are now investigating how to leverage advances in multimodal learning analytics integrating machine learning and multichannel sensors to assess learners’ naturalistic engagement in museums (DRL-1713545). With a focus on assessing engagement in groups of museum visitors as they interact with science exhibits, we are using deep neural architectures to create predictive models of engagement from multimodal data channels, including facial expression, gesture, posture, and movement. Efforts to build multimodal assessment models designed to operate “in the wild” would have been very hard to imagine just a few years ago.

It seems certain that advances in AI will deeply inform assessment. Explorations of “AI-enhanced assessment” will soon give way to “AI-driven assessment.” These forays, already underway as research efforts, will no doubt quickly make their way into practice to support the measurement of a growing constellation of constructs for a broad array of learner populations. Because assessment development is a data-intensive activity, new approaches will be propelled by a virtuous cycle in which large data collections yield increasingly high-fidelity assessment models. At the same time, it will be imperative for AI-centric assessment frameworks to account for fairness, accountability, and transparency. Nevertheless, with the enormous potential these developments hold for helping learners and improving learning, it seems we may well be entering a golden age for assessment research.

Featured Projects

Assessment of Mathematical Learning in Action in the Game-based Learning Environment – E-Rebuild

PI: Fenfeng Ke | Co-PIs: Russell Almond, Kathleen Clark, Gordon Erlebacher, and Valerie Shute

Grade Band: Middle

Knowledge/Skills Assessed: Mathematical practices, including: (a) understanding and using ratio reasoning and proportional relationships to solve mathematical problems, (b) solving mathematical problems involving area and volume, and (c) solving mathematical problems using numerical and algebraic expressions.

Innovation in Assessment: In this project, we examine the methods and validity of using evidence-centered design with Bayesian networks and other educational data mining methods to assess mathematical learning in a game-based learning environment called E-Rebuild. Because knowledge is present in what learners do, how they do it, what tools they use, and how they communicate in and about their doing, it is important to assess knowledge production in context and learning in action rather than testing abstracted and isolated skills and understanding. Recording, tracking, and diagnosing learners’ progress as they play also drives the provision of dynamic, adaptive learning supports, creating an intellectual practice in this game-based learning environment that is autonomous yet guided.
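To illustrate the kind of inference that evidence-centered design with Bayesian networks supports, here is a minimal Python sketch. It is not E-Rebuild’s model: it assumes a single hypothetical latent skill node (e.g., proportional reasoning) whose observed game actions are conditionally independent children, the simplest Bayesian network structure, and every action name and probability below is invented for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass(frozen=True)
class EvidenceRule:
    """Conditional probability table for one observable game action (hypothetical)."""
    p_success_if_mastered: float    # P(success | skill mastered)
    p_success_if_unmastered: float  # P(success | skill not mastered)


def update_mastery(prior: float, rule: EvidenceRule, success: bool) -> float:
    """Posterior P(mastered | observation) by Bayes' rule."""
    if success:
        like_m, like_u = rule.p_success_if_mastered, rule.p_success_if_unmastered
    else:
        like_m = 1 - rule.p_success_if_mastered
        like_u = 1 - rule.p_success_if_unmastered
    joint_m = like_m * prior
    return joint_m / (joint_m + like_u * (1 - prior))


# Hypothetical evidence model for one latent skill; names and numbers are invented.
RULES: Dict[str, EvidenceRule] = {
    "scaled_blueprint_correctly": EvidenceRule(0.85, 0.20),
    "computed_material_volume": EvidenceRule(0.80, 0.30),
}


def score_log(prior: float, log: Iterable[Tuple[str, bool]]) -> float:
    """Fold a play log into a running mastery estimate. Because observations are
    assumed conditionally independent given the skill, updating one observation
    at a time gives the same posterior as conditioning on all of them at once."""
    p = prior
    for action, success in log:
        p = update_mastery(p, RULES[action], success)
    return p
```

In a design like this, a falling mastery estimate is the kind of signal that could trigger an adaptive support such as a hint; where to set that trigger would be a separate design decision.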

Try It: E-Rebuild Mathematics Learning Game

Development and Empirical Recovery for a Learning Progression-Based Assessment of the Function Concept

PI: Edith Graf | Co-PIs: Gregory Budzban, Robert Moses, Sarah Ohis, and Peter van Rijn

Grade Bands: Middle, High

Knowledge/Skills Assessed: Understanding of the mathematical concept of function.

Innovation in Assessment: The goal of our project, a collaborative effort among Educational Testing Service (ETS), The Algebra Project, Southern Illinois University at Edwardsville, and the Young People’s Project, is to validate the interpretation of a learning progression-based assessment for the concept of function in mathematics. Traditionally, students study functions through supporting representations such as equations, Cartesian graphs, and tables of values, and our technology-enhanced assessment includes all of these response types. We also focus on finite-to-finite functions and their supporting representations, including directed graphs, arrow diagrams, and matrices. The technology allows students to interact with both traditional and finite-to-finite representations, and we are working on automated scoring methods.

The tasks are challenging and intended to engage all learners, including those who perform in the bottom quartile on summative assessments. To equitably assess student understanding of the concept of function, we incorporated student voice into the design through focus groups and cognitive interviews. We situated many of the tasks in real-world contexts, and the students provided feedback about language and relatability.

To ensure that the assessment is used with a sufficient number of students performing in the lowest quartile, we have established partnerships with teachers and their classrooms around the country. As part of this arrangement, the teachers receive professional development support on curriculum units directly related to the finite-to-finite function concept. In addition, we developed tutorials on the interactive tools that students can use before taking the assessment.
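The finite-to-finite representations mentioned above are different views of the same object. The Python sketch below illustrates standard mathematical facts about them, not the project’s tasks or scoring: a relation is a function when every domain element has exactly one arrow, and its 0/1 matrix then has exactly one 1 in each row. The function names and example sets are hypothetical.

```python
from collections import Counter
from typing import Dict, Hashable, List, Sequence, Tuple

Pair = Tuple[Hashable, Hashable]


def is_function(pairs: Sequence[Pair], domain: Sequence[Hashable],
                codomain: Sequence[Hashable]) -> bool:
    """A relation is a function iff every domain element has exactly one
    outgoing arrow and every arrow lands in the codomain."""
    sources = Counter(a for a, _ in pairs)
    return (
        set(sources) <= set(domain)
        and all(sources[a] == 1 for a in domain)
        and all(b in codomain for _, b in pairs)
    )


def arrow_diagram(f: Dict[Hashable, Hashable]) -> List[Pair]:
    """Arrow-diagram view: one arrow a -> f(a) per domain element. When domain
    and codomain are the same set, these arrows form a directed graph in which
    every vertex has out-degree 1."""
    return [(a, b) for a, b in f.items()]


def to_matrix(f: Dict[Hashable, Hashable], domain: Sequence[Hashable],
              codomain: Sequence[Hashable]) -> List[List[int]]:
    """Matrix view: entry (i, j) is 1 iff f(domain[i]) == codomain[j]."""
    return [[1 if f[a] == b else 0 for b in codomain] for a in domain]


def matrix_is_function(m: List[List[int]]) -> bool:
    """A 0/1 matrix represents a function iff each row contains exactly one 1."""
    return all(sum(row) == 1 for row in m)
```

For example, with domain `["a", "b", "c"]`, codomain `[1, 2]`, and `f = {"a": 1, "b": 2, "c": 1}`, `to_matrix` yields `[[1, 0], [0, 1], [1, 0]]`, while the relation `[("a", 1), ("a", 2), ("b", 1), ("c", 2)]` fails `is_function` because `"a"` has two arrows.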

Graphing Research on Inquiry with Data in Science (GRIDS)

PI: Marcia Linn | Co-PIs: Elizabeth Gerard, Ou Liu

Grade Band: Middle

Knowledge/Skills Assessed: GRIDS investigates strategies for supporting middle school students in interpreting and using graphs to improve their science learning and understanding.

Innovation in Assessment: The Thermodynamics Challenge curriculum unit encourages students to use an experimentation model to explore their ideas about thermodynamics and to evaluate the insulation effectiveness of different materials by analyzing graphs. The model functions as an interactive space in which students choose and test their own prior ideas and reason about and make sense of graphical data.

The model’s user interface explicitly promotes more self-directed, reflective, and deliberate experimentation, and aims to engage students in three scientific practices: planning and carrying out an investigation, investigating ideas and explanations, and analyzing and interpreting data. The innovative design feature that supports students in these activities is the experimentation matrix, which allows students to track and manage their experimentation with the model. Students first use the matrix to discuss and prioritize tests (planning and investigating ideas): the matrix records students’ preferred tests as “starred” experiments, and the model provides automated feedback to guide their experimentation planning. Students then use the matrix to select tests to run with the model (carrying out an investigation). Finally, students use the matrix to select specific tests for scaffolded analysis and sense-making (interpreting data).

The design of the experimentation matrix encourages student pairs to negotiate decisions together (e.g., which experimental tests to star during planning and which tests to run with the model). Thus, the matrix can help make student thinking visible and available for refinement by providing an accessible, shared learning space for continual discussion, negotiation of experimentation decisions, and sense-making.
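The experimentation matrix can be thought of as a small data structure that tracks each test through the planning, running, and analysis steps described above. The Python sketch below is hypothetical and is not WISE code: the material and condition axes, the field and method names, and the planning-feedback rule (which looks for a controlled comparison) are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from itertools import product
from typing import Dict, List, Tuple


@dataclass
class Trial:
    """One cell of the matrix: a single experimental test (hypothetical fields)."""
    material: str
    condition: str
    starred: bool = False                # prioritized during planning
    has_run: bool = False                # carried out with the model
    selected_for_analysis: bool = False  # chosen for scaffolded sense-making


@dataclass
class ExperimentationMatrix:
    materials: List[str]
    conditions: List[str]
    tests: Dict[Tuple[str, str], Trial] = field(init=False)

    def __post_init__(self) -> None:
        # One cell for every material x condition combination.
        self.tests = {(m, c): Trial(m, c)
                      for m, c in product(self.materials, self.conditions)}

    def star(self, material: str, condition: str) -> None:
        """Planning: record a test the pair prefers."""
        self.tests[(material, condition)].starred = True

    def run(self, material: str, condition: str) -> None:
        """Carrying out an investigation: mark the test as run with the model."""
        self.tests[(material, condition)].has_run = True

    def select(self, material: str, condition: str) -> None:
        """Interpreting data: only tests that have been run can be analyzed."""
        trial = self.tests[(material, condition)]
        if not trial.has_run:
            raise ValueError("run this test before selecting it for analysis")
        trial.selected_for_analysis = True

    def planning_feedback(self) -> str:
        """Hypothetical rule: check for a controlled comparison, i.e., two starred
        tests that differ in material but share the same condition."""
        starred = [t for t in self.tests.values() if t.starred]
        for i, a in enumerate(starred):
            for b in starred[i + 1:]:
                if a.condition == b.condition and a.material != b.material:
                    return "Your plan compares materials under the same condition."
        return "Try starring two materials tested under the same condition."
```

Requiring a test to be run before it can be selected mirrors the order of practices described above; an actual interface might enforce that sequence differently.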

Try It: Web-Based Inquiry Science Environment (WISE)

Math-Mapper 6-8: A Next Generation Diagnostic Assessment and Reporting System within a Learning Trajectory-Based Mathematics Learning Map for Grades 6-8

PI: Jere Confrey

Grade Band: Middle

Knowledge/Skills Assessed: Math-Mapper 6-8 (MM) is organized around a learning map of nine big ideas, 25 relational learning clusters, and 62 constructs. Each construct delineates a learning trajectory (LT) based on a synthesis of the related empirical research on student learning. MM treats an LT as a research-based model of how students’ thinking about a domain-specific concept increases in sophistication in the context of instruction, and it operationalizes each LT through digitally administered and scored diagnostic assessments that return data to students and teachers in real time. The MM assessment items are aligned with the levels of the LTs, written by the team in consultation with in-service teachers, and designed to measure students’ understanding at each level of a construct by asking questions that require deep and critical thinking. Thus, students need to demonstrate their knowledge, comprehension, and application of a construct rather than replicate a memorized procedure. The MM system also notes each construct’s relationships to the Common Core State Standards for middle school math (CCSS-M), as well as common student misconceptions.
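Aligning items to LT levels is what lets a report go beyond percent correct. The Python sketch below is not Math-Mapper’s scoring model; it assumes hypothetical items, a 0.7 mastery threshold, and a simple rule that places a student at the highest consecutive level, starting from level 1, at which they meet the threshold.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Item:
    item_id: str
    level: int  # LT level the item targets (1 = least sophisticated)


def level_report(items: List[Item], responses: Dict[str, bool],
                 threshold: float = 0.7) -> dict:
    """Summarize one student's answers by LT level as well as overall."""
    by_level: Dict[int, List[bool]] = defaultdict(list)
    for item in items:
        if item.item_id in responses:  # skip items the student did not answer
            by_level[item.level].append(responses[item.item_id])

    per_level = {lvl: sum(rs) / len(rs) for lvl, rs in sorted(by_level.items())}
    answered = [r for rs in by_level.values() for r in rs]
    percent_correct = 100 * sum(answered) / len(answered) if answered else 0.0

    # Hypothetical rule: climb levels 1, 2, 3, ... while each meets the threshold.
    estimated_level = 0
    for lvl in sorted(per_level):
        if lvl != estimated_level + 1 or per_level[lvl] < threshold:
            break
        estimated_level = lvl

    return {"percent_correct": percent_correct,
            "per_level": per_level,
            "estimated_level": estimated_level}
```

For instance, a student who answers both level-1 items, one of two level-2 items, and both level-3 items correctly scores about 83 percent overall, yet this rule places them at level 1; a teacher might then look more closely at the level-2 gap and at whether the level-3 successes reflect secure understanding.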

Innovation in Assessment: Math-Mapper’s dynamic data reports are returned to students and teachers instantly. These reports provide comprehensive, personalized information that can be used to identify the specific needs of students as they work to deepen their mathematical knowledge and understanding. This personalized data helps identify every student’s mathematical strengths and challenges, no matter where they are in their academic journey, and can be particularly useful in ensuring that students are no longer unseen or underserved.

- Students get a report for each cluster that goes beyond percent correct: with their MM data, students can see how they are progressing along the LTs. From the report, students can view an item matrix and access common misconceptions, choose to revise their work, or solve related practice problems. These reports arm students with the information they need to identify their own strengths and areas for improvement, and they encourage a growth mindset by providing tools to address their own knowledge gaps.

- Teachers can immediately use these valid and reliable data formatively to drive their instructional decisions efficiently, such as creating meaningful and flexible student workgroups based on whole-class MM reports. These data can inform teachers’ short-term adjustments (e.g., deciding how to provide additional support in the moment to address students’ gaps in knowledge) and their long-term adjustments (e.g., adjusting their initial instruction of the content in the following year, with a new group of students, to address common gaps or misconceptions).

Try It: Math-Mapper 6-8 | Learn More

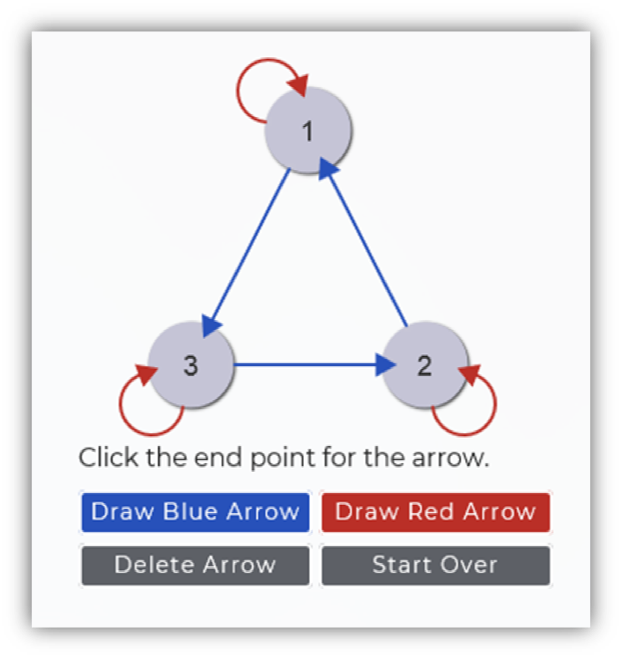

Next Generation Science Assessment Project

PIs: Christopher Harris, Joseph Krajcik, Kevin McElhaney, James Pellegrino | Co-PIs: Louis DiBello

Grade Band: Middle

Knowledge/Skills Assessed: The assessment tasks and accompanying scoring rubrics measure integrated, three-dimensional competencies aligned to middle school performance expectations in physical and life sciences from the Next Generation Science Standards (NGSS).

Innovation in Assessment: Our collaborative project has generated technology-enhanced assessment resources that teachers and other educators can draw upon to support NGSS-aligned classroom instructional practice and professional learning for middle school physical and life sciences performance expectations (NSF: #1903103, #1316903, #1316908, #1316874; Moore Foundation: #4482). An evidence-centered design approach was used to design a suite of tasks, featuring simulations, drawing tools, and other media, through which students can demonstrate competency in the knowledge, skills, and abilities underlying the NGSS performance expectations (PEs). The tasks are also designed to increase access and engagement for students with a range of ethnic and cultural backgrounds, linguistic abilities, and learning differences. For example, NGSA tasks feature visual aids and multiple means of demonstrating understanding to support students who may struggle to access, comprehend, and respond to traditional, text-based assessments (e.g., English language learners).

An online delivery platform allows us to take advantage of web-based tools to integrate computational models, which students can manipulate to explore phenomena, generate data for a scientific argument, or carry out an experiment. Teachers can select tasks to use with their students and assign them to be completed individually, in small groups, or during whole-class instruction. Further, as students complete the assessment tasks online, teachers can monitor student progress in real time. Importantly, the assessment portal links scoring rubrics to tasks to help teachers understand and evaluate which aspects of student work show progress toward mastery of the targeted three-dimensional (3-D) integrated knowledge. Using these rubrics, teachers can provide online feedback to individual students.

Try It: 100+ Free, Online Assessment Tasks | Publications & Presentations | Learn More

Additional Projects

CAREER: Scaffolding Engineering Design to Develop Integrated STEM Understanding with WISEngineering

CodeR4STATS - Code R for AP Statistics

Connected Biology: Three-Dimensional Learning from Molecules to Populations (Collaborative Research: Reichsman, White)

Development of the Electronic Test of Early Numeracy

DIMEs: Immersing Teachers and Students in Virtual Engineering Internships

Engaging High School Students in Computer Science with Co-Creative Learning Companions

Enhancing Middle Grades Students' Capacity to Develop and Communicate Their Mathematical Understanding of Big Ideas Using Digital Inscriptional Resources (Collaborative Research: Dorsey, Phillips)

Fostering Collaborative Computer Science Learning with Intelligent Virtual Companions for Upper Elementary Students (Collaborative Research: Boyer, Wiebe)

Guiding Understanding via Information from Digital Environments (GUIDE)

Ramping Up Accessibility in STEM: Inclusively Designed Simulations for Diverse Learners

SimScientists Assessments: Physical Science Links

SmartCAD: Guiding Engineering Design with Science Simulations (Collaborative Research: Chiu, Magana-de-Leon, Xie)

Strengthening the Quality, Design and Usability of Simulations as Assessments of Teaching Practice

Supporting Teacher Practice to Facilitate and Assess Oral Scientific Argumentation: Embedding a Real-Time Assessment of Speaking and Listening into an Argumentation-Rich Curriculum (Collaborative Research: Greenwald, Henderson)

Supporting Teachers in Responsive Instruction for Developing Expertise in Science (Collaborative Research: Linn, Riordan)

Video in the Middle: Flexible Digital Experiences for Mathematics Teacher Education